CSE 575 SVM Exercise Questions

In this post, I will go over the SVM-related questions we discussed last Friday. A scanned version of all 6 questions is available here; please refer to it while you skim through this post. Q3 is skipped, since it is about Logistic Regression rather than SVMs.

Q1 and Q2 - Understanding the Boundary

For the linearly separable SVM, recall that you are supposed to find a hyperplane $\mathbf{w}^\top\mathbf{x} + b = 0$ that divides all data points in the training set into two classes $y_i \in \{+1, -1\}$.

Its classifier function is $f(\mathbf{x}) = \operatorname{sign}(\mathbf{w}^\top\mathbf{x} + b)$.

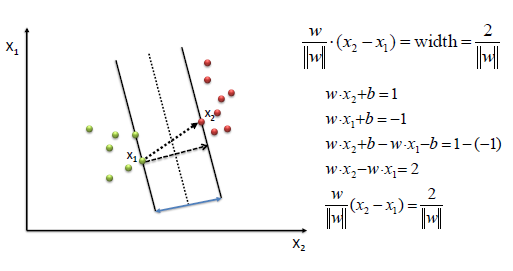
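A minimal Python sketch of that classifier function, assuming a made-up hyperplane $\mathbf{w} = (1, -1)$, $b = 0.5$ rather than one learned from the exercise data. It only reports which side of $\mathbf{w}^\top\mathbf{x} + b = 0$ a point falls on; mapping a score of exactly 0 to $+1$ is an arbitrary tie-breaking choice.

```python
import numpy as np

# Hypothetical hyperplane parameters (not taken from the exercise sheet).
w = np.array([1.0, -1.0])
b = 0.5

def classify(x, w, b):
    """Return +1 or -1 depending on which side of w^T x + b = 0 the point x lies."""
    score = np.dot(w, x) + b
    return 1 if score >= 0 else -1  # score == 0 is arbitrarily assigned to +1

print(classify(np.array([2.0, 0.0]), w, b))  # w.x + b = 2.5  -> 1
print(classify(np.array([0.0, 2.0]), w, b))  # w.x + b = -1.5 -> -1
```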

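We want to maximize the gap $\frac{2}{\|\mathbf{w}\|}$, which is equivalent to minimizing $\frac{1}{2}\|\mathbf{w}\|^2$ subject to $y_i(\mathbf{w}^\top\mathbf{x}_i + b) \ge 1$ for every training point. The so-called support vectors are the sample points that sit right on the ‘river banks’, i.e. the points that satisfy

$$y_i(\mathbf{w}^\top\mathbf{x}_i + b) = 1.$$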

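The penalty coefficient $C$ only appears in the soft-margin version, which minimizes $\frac{1}{2}\|\mathbf{w}\|^2 + C\sum_i \xi_i$ subject to $y_i(\mathbf{w}^\top\mathbf{x}_i + b) \ge 1 - \xi_i$ and $\xi_i \ge 0$. A large $C$ makes every slack $\xi_i$ expensive, so the optimizer prefers to keep training points out of the gap even if that makes the gap narrower; a small $C$ tolerates some violations in exchange for a wider gap.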
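To see how $C$ trades gap width against violations, here is a Python sketch on a tiny, hypothetical 1-D dataset. It does not train an SVM: it just evaluates the soft-margin objective $\frac{1}{2}w^2 + C\sum_i \max(0, 1 - y_i(wx_i + b))$ for two hand-picked candidate hyperplanes, one with a wide gap that misclassifies a noisy point and one with a narrow gap that classifies everything correctly, and also lists the points with $y_i f(x_i) = 1$ (the support vectors of a given candidate).

```python
import numpy as np

# Hypothetical 1-D training set; the positive point at x = -0.5 lies among the negatives.
X = np.array([-2.0, -1.0, -0.5, 1.0, 2.0])
y = np.array([-1, -1, 1, 1, 1])

def soft_margin_objective(w, b, C):
    """0.5 * w^2 + C * sum_i max(0, 1 - y_i (w x_i + b)) for a 1-D linear SVM."""
    margins = y * (w * X + b)               # y_i f(x_i)
    slack = np.maximum(0.0, 1.0 - margins)  # smallest feasible xi_i for this (w, b)
    return 0.5 * w ** 2 + C * slack.sum()

def support_vectors(w, b, tol=1e-9):
    """Points sitting exactly on the 'river banks', i.e. y_i f(x_i) = 1."""
    margins = y * (w * X + b)
    return X[np.abs(margins - 1.0) < tol]

# Two hand-picked candidates (w, b); neither is claimed to be the true optimum.
candidates = {
    "wide": (1.0, 0.0),    # gap width 2/|w| = 2.0, misclassifies x = -0.5
    "narrow": (4.0, 3.0),  # gap width 2/|w| = 0.5, classifies every point correctly
}

def preferred(C):
    """Name of the candidate with the lower soft-margin objective for this C."""
    return min(candidates, key=lambda name: soft_margin_objective(*candidates[name], C))

for C in (0.1, 100.0):
    print(C, preferred(C))  # small C -> "wide", large C -> "narrow"
print(support_vectors(*candidates["narrow"]))  # x = -1.0 and x = -0.5
```

With $C = 0.1$ the wide candidate scores $0.5 + 0.1 \cdot 1.5 = 0.65$ against $8$ for the narrow one; with $C = 100$ the wide candidate's single violation costs $150.5$, so the narrow gap wins.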

Q4 ~ Q6 - The Kernel Trick and RBF

Just in case you lose track of the questions, here they are.

Kernel functions are not projections; the feature maps $\phi$ are. Kernel functions return scalar values.

The $\phi$'s take care of projecting your original data points $\mathbf{x}$ into a higher-dimensional feature space, but we don’t need to know (and it is often hard to write down) what $\phi$ exactly is. Remember that a kernel function of two vectors equals an implicit dot product in that feature space:

$$K(\mathbf{x}_i, \mathbf{x}_j) = \phi(\mathbf{x}_i)^\top \phi(\mathbf{x}_j).$$

In Q4, you are supposed to rewrite every expression involving $\phi$ in terms of $K$.
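As an illustration of this kind of rewriting, here is a Python sketch with a kernel whose feature map can actually be written out: the degree-2 polynomial kernel $K(\mathbf{x}, \mathbf{z}) = (\mathbf{x}^\top\mathbf{z})^2$ on $\mathbb{R}^2$, with $\phi(\mathbf{x}) = (x_1^2, \sqrt{2}\,x_1x_2, x_2^2)$. The kernel and the two test points are chosen for illustration and are not the ones on the exercise sheet. The sketch checks that $K$ equals the dot product of the $\phi$'s, and that the squared feature-space distance $\|\phi(\mathbf{x}) - \phi(\mathbf{z})\|^2 = K(\mathbf{x},\mathbf{x}) - 2K(\mathbf{x},\mathbf{z}) + K(\mathbf{z},\mathbf{z})$ can be computed from $K$ alone.

```python
import numpy as np

def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel K(x, z) = (x . z)^2 (illustrative choice)."""
    return float(np.dot(x, z)) ** 2

def phi(x):
    """Explicit feature map R^2 -> R^3 for poly2_kernel: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2.0) * x1 * x2, x2 ** 2])

def feature_sq_dist(kernel, x, z):
    """||phi(x) - phi(z)||^2 expressed with kernel evaluations only."""
    return kernel(x, x) - 2.0 * kernel(x, z) + kernel(z, z)

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])

# Dot product in feature space vs. kernel value: (1*3 + 2*(-1))^2 = 1
dot_via_phi = float(np.dot(phi(x), phi(z)))
dot_via_k = poly2_kernel(x, z)

# Squared distance in feature space, with and without phi: 25 - 2*1 + 100 = 123
dist_via_phi = float(np.sum((phi(x) - phi(z)) ** 2))
dist_via_k = feature_sq_dist(poly2_kernel, x, z)

print(dot_via_k, dot_via_phi)    # both 1, up to floating-point rounding
print(dist_via_k, dist_via_phi)  # both 123, up to floating-point rounding
```

The `feature_sq_dist` helper never touches $\phi$, so it works just as well for kernels such as the RBF, whose feature space is infinite-dimensional and cannot be written out like this.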

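For Q5 and Q6, given the RBF kernel $K(\mathbf{x}, \mathbf{z}) = \exp\left(-\frac{\|\mathbf{x}-\mathbf{z}\|^2}{2\sigma^2}\right)$, we have $K(\mathbf{x}, \mathbf{x}) = 1$ for every $\mathbf{x}$, so we can easily get that:

$$\|\phi(\mathbf{x}) - \phi(\mathbf{z})\|^2 = K(\mathbf{x},\mathbf{x}) - 2K(\mathbf{x},\mathbf{z}) + K(\mathbf{z},\mathbf{z}) = 2 - 2\exp\left(-\frac{\|\mathbf{x}-\mathbf{z}\|^2}{2\sigma^2}\right).$$

The right-hand side is a monotonically increasing function of the Euclidean distance $\|\mathbf{x}-\mathbf{z}\|$. This means that whichever training point $\mathbf{x}_i$ is closest to a query point $\mathbf{x}$ in the lower-dimensional input space, its image $\phi(\mathbf{x}_i)$ is still the closest to $\phi(\mathbf{x})$ in the higher-dimensional feature space.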
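To check this argument numerically, here is a small Python sketch using randomly generated, hypothetical points in $\mathbb{R}^3$ and the RBF kernel with $\sigma = 1$ (any $\sigma > 0$ should give the same result). It finds the nearest training point to a query twice: once with the plain Euclidean distance, and once with the feature-space distance computed through $K$ alone. Because the latter is an increasing function of the former, the two indices should coincide.

```python
import numpy as np

def rbf_kernel(x, z, sigma=1.0):
    """Gaussian RBF kernel exp(-||x - z||^2 / (2 sigma^2))."""
    return np.exp(-np.sum((x - z) ** 2) / (2.0 * sigma ** 2))

def rbf_feature_sq_dist(x, z, sigma=1.0):
    """||phi(x) - phi(z)||^2 = K(x,x) - 2K(x,z) + K(z,z), which equals 2 - 2K(x,z) here."""
    return rbf_kernel(x, x, sigma) - 2.0 * rbf_kernel(x, z, sigma) + rbf_kernel(z, z, sigma)

rng = np.random.default_rng(0)
train = rng.normal(size=(50, 3))  # hypothetical training points in R^3
query = rng.normal(size=3)        # hypothetical query point

# Nearest neighbour in the input space vs. in the RBF feature space
nn_input = int(np.argmin([np.linalg.norm(query - t) for t in train]))
nn_feature = int(np.argmin([rbf_feature_sq_dist(query, t) for t in train]))
print(nn_input, nn_feature)  # the two indices agree
```

Only the nearest neighbour is compared: for points very far from the query, $K$ underflows towards 0 and several feature-space distances can round to the same value near 2, so comparing full orderings could show spurious ties.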