CSE 575 Logistic Regression, Intuition

This post shares some of my own thoughts on the concept of logistic regression. Note that it is not going to be perfectly rigorous; rather, I’d like to build up some intuition.

Understanding the hypothesis

First of all, logistic regression goes hand in hand with linear regression.

Inspired by the UCI Wine dataset, let’s imagine we want to estimate the price of each bottle of wine given its features (e.g. alcohol, color intensity, and so on). We assume the price depends linearly on each feature, and we’d like to find such a linear function so that we can estimate the price of any new wine datum in the future. Intuitively, we can write down this weighted sum for an n-feature bottle $X = (x_1, x_2, \dots, x_n)$:

$$h(X) = w_0 + w_1 x_1 + w_2 x_2 + \dots + w_n x_n = w_0 + \sum_{j=1}^{n} w_j x_j$$

where $w_0$ is the bias, or essentially the base price of each bottle. Each scalar weight $w_j$ indicates how much feature $x_j$ affects the final price of the wine.
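To make the weighted sum concrete, here is a minimal Python sketch of the linear hypothesis for a single datum. The function name `linear_hypothesis` is hypothetical, and the feature values and weights below are made up for illustration; they are not fitted to the UCI Wine data.

```python
from typing import Sequence


def linear_hypothesis(x: Sequence[float], w: Sequence[float], w0: float) -> float:
    """Weighted sum h(X) = w0 + w1*x1 + ... + wn*xn for one n-feature datum."""
    if len(x) != len(w):
        raise ValueError("x and w must contain the same number of features")
    # w0 acts as the base price; each w_j scales the contribution of feature x_j.
    return w0 + sum(wj * xj for wj, xj in zip(w, x))


# Hypothetical bottle: alcohol = 13.0, color intensity = 5.0 (illustrative numbers only).
price = linear_hypothesis([13.0, 5.0], w=[1.5, 2.0], w0=10.0)
print(price)  # 39.5
```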

To put it more generally, we call the aforementioned weighted sum a linear regression hypothesis, and the weights its parameters. The hypothesis offers a specific way to describe your data, tuned by its parameters.

Projecting the hypothesis

We use logistic regression to tackle classification. In the simplest scenario, we only consider 0-1 (binomial) classification.

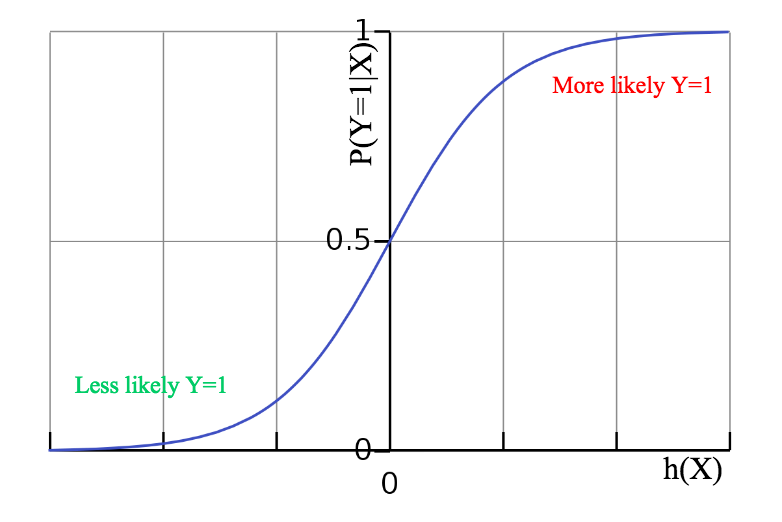

What does the S-shaped function mean? The logistic function, or sigmoid function $\sigma(z)$, smoothly squashes any continuous input $z$ into the range $(0, 1)$, and it is differentiable everywhere. So if we tune the hypothesis’ parameters well enough, its output can be aligned with the class probabilities. In other words, the linear hypothesis result can be projected into the probability of a class given the data.
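A minimal sketch of the logistic function and its derivative, assuming scalar inputs. The branch on the sign of $z$ is just one common way to keep `math.exp` from overflowing; note that in floating point the output can round to exactly 0.0 or 1.0 for large $|z|$, even though mathematically it stays strictly inside $(0, 1)$. The derivative identity $\sigma'(z) = \sigma(z)(1 - \sigma(z))$ is standard and is what makes the later partial derivatives tidy.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z)), mapping any real z into (0, 1)."""
    # Branch on the sign so math.exp only ever sees a non-positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_derivative(z: float) -> float:
    """Derivative of the logistic function: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


for z in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"z = {z:+5.1f}   sigma(z) = {sigmoid(z):.4f}   slope = {sigmoid_derivative(z):.4f}")
```

The slope peaks at $z = 0$ and flattens out in both tails, which is exactly the S shape in the plot.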

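Thus the logistic hypothesis can be understood as a projection layer on top of the linear regression hypothesis:

$$g(X) = \sigma\big(h(X)\big)$$

where

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

Hence the logistic regression hypothesis $g(X)$ gives the probability of class 1:

$$P(Y = 1 \mid X) = g(X) = \frac{1}{1 + e^{-\left(w_0 + \sum_{j=1}^{n} w_j x_j\right)}} \qquad (1)$$

and on the other hand

$$P(Y = 0 \mid X) = 1 - g(X) = \frac{e^{-\left(w_0 + \sum_{j=1}^{n} w_j x_j\right)}}{1 + e^{-\left(w_0 + \sum_{j=1}^{n} w_j x_j\right)}} \qquad (2)$$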
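The two class probabilities can be sketched the same way. The helper name `class_probabilities` is hypothetical, and the weights in the example are arbitrary; the point is only that $g(X) = \sigma(h(X))$ gives $P(Y=1 \mid X)$ and its complement $1 - g(X)$ gives $P(Y=0 \mid X)$, so the pair always sums to 1.

```python
import math
from typing import Sequence, Tuple


def sigmoid(z: float) -> float:
    """Logistic function, branched on the sign of z to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def class_probabilities(x: Sequence[float], w: Sequence[float], w0: float) -> Tuple[float, float]:
    """Return (P(Y=1 | X), P(Y=0 | X)) for one datum under the logistic hypothesis."""
    h = w0 + sum(wj * xj for wj, xj in zip(w, x))  # linear hypothesis h(X)
    g = sigmoid(h)                                  # g(X) = sigma(h(X)) = P(Y=1 | X)
    return g, 1.0 - g                               # P(Y=0 | X) = 1 - g(X)


# Arbitrary illustrative weights; here h(X) is about -0.4, so class 0 is more likely.
p1, p0 = class_probabilities([1.0, 2.0], w=[0.8, -0.5], w0=-0.2)
print(f"P(Y=1|X) = {p1:.4f}, P(Y=0|X) = {p0:.4f}")
```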

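So, to put it into a graph:

We can tell that if the linear hypothesis passes some threshold, the given datum $X$ is more likely to be classified as $Y=1$. Because $\sigma(0) = 0.5$, the natural threshold is $h(X) = 0$: $h(X) > 0$ exactly when $g(X) > 0.5$.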
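The threshold argument can be checked numerically. This sketch uses hypothetical function names, one feature, and arbitrary weights, and classifies each point twice: once by comparing $g(X)$ to a probability cutoff, and once by comparing $h(X)$ to the matching linear threshold $\ln\big(t / (1 - t)\big)$, which is 0 when $t = 0.5$. The two rules agree, apart from floating-point rounding when $h(X)$ is extremely close to the threshold.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, branched on the sign of z to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_by_probability(h: float, cutoff: float = 0.5) -> int:
    """Predict Y=1 when g(X) = sigma(h(X)) exceeds the probability cutoff."""
    return 1 if sigmoid(h) > cutoff else 0


def predict_by_linear_threshold(h: float, cutoff: float = 0.5) -> int:
    """Equivalent rule on the linear hypothesis: h(X) > ln(cutoff / (1 - cutoff))."""
    return 1 if h > math.log(cutoff / (1.0 - cutoff)) else 0


w, w0 = 2.0, -3.0  # arbitrary single-feature weights, for illustration only
for x in (0.0, 1.0, 1.4, 1.6, 2.0, 3.0):
    h = w0 + w * x
    print(x, predict_by_probability(h), predict_by_linear_threshold(h))
```

Checking the sign of $h(X)$ avoids evaluating the sigmoid at all when only a hard 0/1 label is needed.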

How to estimate the weights/parameters

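Similarly, to find the optimal weights we can again use maximum likelihood estimation. In this binomial scenario, given a dataset of $m$ n-feature observations $\{(X^{(i)}, y^{(i)})\}_{i=1}^{m}$, where $X^{(i)} \in \mathbb{R}^n$ and $y^{(i)} \in \{0, 1\}$, the likelihood is

$$L(w) = \prod_{i=1}^{m} P\big(Y = y^{(i)} \mid X^{(i)}\big) = \prod_{i=1}^{m} g\big(X^{(i)}\big)^{y^{(i)}} \Big(1 - g\big(X^{(i)}\big)\Big)^{1 - y^{(i)}}$$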
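A direct translation of the likelihood product, as a minimal sketch: the function name and the toy data are hypothetical, not real wine records. Because each label is 0 or 1, the factor $g^{y}(1 - g)^{1-y}$ reduces to $g$ when $y = 1$ and to $1 - g$ when $y = 0$, which is what the loop uses. Running it on many points also exposes a practical problem: a product of thousands of probabilities underflows to 0.0 in double precision.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic function, branched on the sign of z to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def likelihood(X: Sequence[Sequence[float]], y: Sequence[int],
               w: Sequence[float], w0: float) -> float:
    """Product over the dataset of P(Y = y_i | X_i) under the logistic hypothesis."""
    total = 1.0
    for xi, yi in zip(X, y):
        g = sigmoid(w0 + sum(wj * xj for wj, xj in zip(w, xi)))
        # g**yi * (1 - g)**(1 - yi) simplifies to g or (1 - g) for yi in {0, 1}.
        total *= g if yi == 1 else 1.0 - g
    return total


X_small = [[1.0], [2.0], [3.0]]
y_small = [1, 0, 1]
print(likelihood(X_small, y_small, w=[0.0], w0=0.0))  # 0.125: every g is 0.5

X_big = [[float(i)] for i in range(2000)]
y_big = [i % 2 for i in range(2000)]
print(likelihood(X_big, y_big, w=[0.0], w0=0.0))  # 0.0: 0.5**2000 underflows
```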

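We prefer to maximize the log-likelihood instead, because the logarithm turns the product into a sum:

$$\ell(w) = \sum_{i=1}^{m} \Big[ y^{(i)} \ln g\big(X^{(i)}\big) + \big(1 - y^{(i)}\big) \ln\Big(1 - g\big(X^{(i)}\big)\Big) \Big]$$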
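The same quantity in log space, as a sketch with hypothetical names. It relies on the identity $1 - \sigma(z) = \sigma(-z)$, so each term is $\ln \sigma(h)$ when $y = 1$ and $\ln \sigma(-h)$ when $y = 0$; `log_sigmoid` is branched on the sign of $z$ so that `math.exp` never overflows. On a 2000-point toy set where the raw product would underflow to 0.0, the log-likelihood stays a finite sum.

```python
import math
from typing import Sequence


def log_sigmoid(z: float) -> float:
    """ln(sigma(z)), computed without overflowing math.exp."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def log_likelihood(X: Sequence[Sequence[float]], y: Sequence[int],
                   w: Sequence[float], w0: float) -> float:
    """Sum of y_i * ln g(X_i) + (1 - y_i) * ln(1 - g(X_i)) over the dataset."""
    total = 0.0
    for xi, yi in zip(X, y):
        h = w0 + sum(wj * xj for wj, xj in zip(w, xi))
        # ln(1 - sigma(h)) == ln(sigma(-h)), so both terms reuse log_sigmoid.
        total += yi * log_sigmoid(h) + (1 - yi) * log_sigmoid(-h)
    return total


X_big = [[float(i)] for i in range(2000)]
y_big = [i % 2 for i in range(2000)]
print(log_likelihood(X_big, y_big, w=[0.0], w0=0.0))  # 2000 * ln(0.5), about -1386.29
```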

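Now we can solve for the weights that maximize the likelihood or, equivalently, the log-likelihood, by bringing in (1) and (2) and taking partial derivatives with respect to each weight $w_j$.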
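Carrying out that differentiation with the identity $\sigma'(z) = \sigma(z)(1 - \sigma(z))$ gives the standard result $\frac{\partial \ell}{\partial w_j} = \sum_{i=1}^{m} \big(y^{(i)} - g(X^{(i)})\big)\, x_j^{(i)}$, where $x_0 = 1$ for the bias $w_0$. Setting these to zero has no closed-form solution, so the weights are usually found iteratively. Below is a minimal sketch of plain batch gradient ascent on the log-likelihood $\ell(w)$, one common choice among several (Newton's method is another); the function name `fit_logistic_regression`, the learning rate, the iteration count and the toy data are all illustrative assumptions, not tuned values.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Logistic function, branched on the sign of z to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic_regression(X: Sequence[Sequence[float]], y: Sequence[int],
                            learning_rate: float = 0.05,
                            n_iters: int = 10000) -> Tuple[List[float], float]:
    """Maximize the log-likelihood by batch gradient ascent; returns (w, w0)."""
    n = len(X[0])
    w = [0.0] * n
    w0 = 0.0
    for _ in range(n_iters):
        grad_w = [0.0] * n
        grad_w0 = 0.0
        for xi, yi in zip(X, y):
            # Residual y_i - g(X_i) is the common factor in every partial derivative.
            err = yi - sigmoid(w0 + sum(wj * xj for wj, xj in zip(w, xi)))
            grad_w0 += err
            for j in range(n):
                grad_w[j] += err * xi[j]
        # Step *up* the gradient, since we are maximizing rather than minimizing.
        w0 += learning_rate * grad_w0
        w = [wj + learning_rate * gj for wj, gj in zip(w, grad_w)]
    return w, w0


# Toy one-feature data (not real wine records): labels tend toward 1 as x grows.
X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
y = [0, 0, 1, 0, 1, 1]
w, w0 = fit_logistic_regression(X, y)
print("weights:", w, "bias:", w0)
```

This toy set is deliberately not linearly separable, so the maximum-likelihood weights are finite. On perfectly separable data, plain gradient ascent keeps growing the weights, because the likelihood approaches its supremum without ever attaining it.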